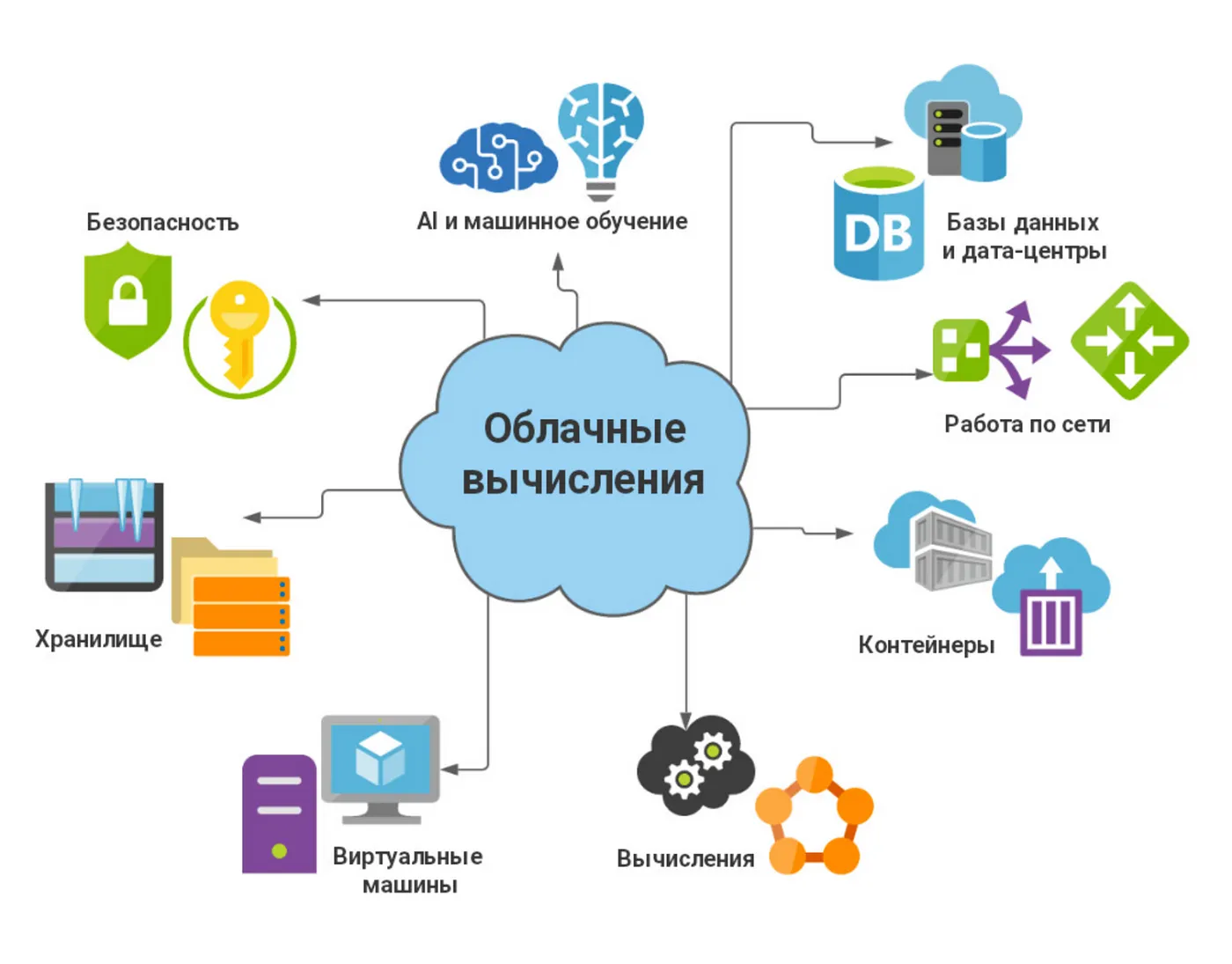

Cloud computing is the infrastructure, solutions, software, and resources that a provider delivers over a network, or more precisely, through the cloud. It is a broad concept that covers vCPUs, databases, GPUs, and DAVMs (ready-made virtual machines for data analytics). Cloud computing has three main service models: IaaS (infrastructure as a service), PaaS (platform as a service), and SaaS (software as a service).

Using cloud computing services and cloud resources helps businesses avoid capital expenditure on their own infrastructure and scale faster. For example, a company can create virtual desktops for distributed teams if it needs to move staff to remote work. In this article, we look at the features of the different types and models of cloud computing and examine when they pay off.

A brief history of cloud computing

The term "cloud computing" is relatively recent. People began searching Google for "Cloud Computing" at the end of 2007, and the query gradually displaced "Grid Computing". One of the first companies to bring the concept to the world was IBM, which rolled out its "Blue Cloud" service in early 2008. The project was a series of "computing offerings" that would let companies work with a distributed, globally accessible fabric of resources instead of locally or remotely located servers. The idea behind "Blue Cloud" built on IBM's experience in high-performance systems, open standards, and open source software. To understand how technology got to this point, let's look at several important events.

The concept of cloud computing goes back to Joseph Licklider and the 1960s, when he was actively involved in the work that led to ARPANET (Advanced Research Projects Agency Network). His idea was that every person on Earth would be connected to a network from which they would get data and programs. Another scientist, John McCarthy, proposed that computing power would be provided to users as a service. The idea was ahead of its time, so it had to wait until the 1990s for technology to catch up.

The growth in bandwidth in the 1990s did not produce an immediate breakthrough, because almost no provider was ready for such a turn. Still, faster networks gave cloud computing its momentum.

One of the most significant moments of that period was the launch of Salesforce.com in 1999. It was one of the first companies to offer access to its application over the internet. This was already a prototype of the first SaaS products.

The next milestone was Amazon's development of a cloud web service in 2002, which made it possible to store information and run computations. In 2006, Amazon launched a web service called Elastic Compute Cloud (EC2), which let users run their own applications.

In 2008, Microsoft announced its plans for cloud computing. Amusingly, the company is now discussing laptops without an OS, where the entire operating system would be delivered online from the cloud. Back then, Microsoft announced not just a service but a full cloud operating system, Windows Azure.

Why cloud computing is called that

The provider owns a fleet of servers located in a data center. Depending on their needs, companies can rent anything from servers with a fraction of a core for micro-projects such as Telegram bots to entire rows of racks that host many virtual machines, for example to run a large marketplace. The resources the provider offers its customers are virtualized. Virtualization rests on the ability of one computer to do the work of several by distributing its resources across multiple logical environments. With a hypervisor, such a computer can be sliced into virtual machines, and the necessary software can then be installed on them.

The provider delivers IaaS services on top of its own cloud (for example, built on an open source solution) or a vendor's cloud. Users can access their infrastructure and projects from anywhere in the world.

How cloud computing works

The main principle of cloud computing is paying for resources as they are consumed. The pay-as-you-go model can be implemented in different ways: as payment for the resources used at the end of the month, or as a payment at the start of the month with any unused balance carried over to the next month. Either way, the model makes resource costs highly transparent.
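The two pay-as-you-go variants can be illustrated with a minimal Python sketch. The `Usage` class, the function names, and all prices are hypothetical and chosen only for illustration; real providers meter and price resources in their own ways.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    resource: str          # e.g. "vcpu" or "ram_gb" (illustrative names)
    unit_hours: float      # consumed units multiplied by hours
    rate_per_hour: float   # hypothetical price per unit-hour


def postpaid_invoice(usages: list[Usage]) -> float:
    """Post-paid variant: bill everything consumed at the end of the month."""
    return round(sum(u.unit_hours * u.rate_per_hour for u in usages), 2)


def prepaid_balance(balance: float, usages: list[Usage]) -> float:
    """Prepaid variant: deduct consumption; the remainder carries over."""
    return round(balance - postpaid_invoice(usages), 2)


# One vCPU and one GB of RAM running for a 30-day month (720 hours).
month = [Usage("vcpu", 720, 0.01), Usage("ram_gb", 720, 0.005)]
print(postpaid_invoice(month))       # amount billed at month end
print(prepaid_balance(20.0, month))  # balance carried to the next month
```

In both variants the customer pays only for the metered consumption; the difference is when the money changes hands.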

Examples of cloud computing

- Cloud databases. Cloud databases as a service make it possible to quickly deploy new replicas and masters, make them fault-tolerant, and manage them through an API and Terraform.

- Machine Learning. Using ML platforms and preconfigured virtual machines shortens training time and solves the problem of logging and backing up data.

- Managed Kubernetes. Managed Kubernetes typically hosts complex applications with a microservice architecture. This approach helps set up container auto-healing and fast cluster provisioning, optionally with GPU support. For the business, it is also a good way to share responsibility and avoid spending resources on in-house staff.

There is also a fourth model, which is not as popular yet but has advantages of its own.

Features and drawbacks of cloud computing

Large organizations tend to prefer CAPEX spending, because it helps increase the company's capitalization. Operating expenses on cloud computing have no such effect, because the infrastructure is rented.

Startups may lack the resources to deploy an on-premise private cloud, so using a provider's resources is more cost-effective for them.
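The CAPEX versus OPEX trade-off can be made concrete with a rough break-even estimate. The sketch below is a simplification under stated assumptions: costs are constant per month, and the function name and all figures are hypothetical. It ignores depreciation, financing, and price changes.

```python
import math


def break_even_month(capex, own_monthly, cloud_monthly):
    """Return the first month in which cumulative cloud rent reaches the
    cumulative cost of owning hardware, or None if renting never costs more.

    capex         -- one-off purchase cost of own hardware (hypothetical)
    own_monthly   -- monthly cost of running own hardware
    cloud_monthly -- monthly cloud bill for the same workload
    """
    monthly_saving = cloud_monthly - own_monthly
    if monthly_saving <= 0:
        return None  # the cloud is never more expensive than owning
    return math.ceil(capex / monthly_saving)


print(break_even_month(24000, 500, 1500))
print(break_even_month(24000, 1500, 1000))
```

A project expected to live for less time than the break-even point is a natural candidate for rented capacity, which is often the situation of a startup.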

Differences between cloud and dedicated servers

We partly touched on this topic when discussing scaling, but the key difference lies elsewhere: dedicated and cloud servers handle resources differently. With dedicated servers, as in a private cloud, all capacity is reserved for a single company. In a public cloud, the provider places its customers in neighboring slots of one large cloud, so to speak.

In an on-premise deployment, dedicated servers can be customized and tuned for specific tasks.

Integration with business processes and business benefits

Cloud computing is easy to scale, and this quality can be used in many different areas. For example, if a business needs to adopt ML practices, the first model training runs take place on its own hardware: scale is not needed yet, and what matters is validating hypotheses and understanding which direction to take. If the basic hypothesis holds, it then makes sense either to buy equipment or to rent computing capacity from a provider.

Another aspect of integration with business processes is resource reservation. For example, a business has seasonal demand and knows this from historical data. Every year, ahead of the load spike, it reserves a certain amount of capacity so that the company's services keep working correctly.
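One simple way to turn historical data into a reservation is to take the worst seasonal peak seen so far and add some headroom. The sketch below assumes load is measured in vCPUs; the function name, the 20% headroom, and the sample figures are all hypothetical.

```python
import math


def capacity_to_reserve(historical_peaks, baseline, headroom_pct=20):
    """Extra vCPUs to reserve before the season starts.

    historical_peaks -- peak vCPU demand observed in previous seasons
    baseline         -- vCPUs the business already runs all year round
    headroom_pct     -- safety margin on top of the worst peak, in percent
    """
    target = max(historical_peaks) * (100 + headroom_pct) / 100
    return max(0, math.ceil(target - baseline))


# Peaks of the last three seasons against a year-round baseline of 100 vCPUs.
print(capacity_to_reserve([120, 150, 140], baseline=100))
```

A real forecast would usually account for growth trends as well; the point here is only that the reservation is derived from data the business already has.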

Low migration costs

Because cloud servers are easier to deploy than dedicated ones, migrating from a global provider is smoother, cheaper, and, above all, faster. Migration here means not only deploying servers but also configuring the connectivity between them. Using a provider's services also saves money on maintaining the servers and creating the conditions they need to run.

Why some find it hard to trust cloud services

When the servers sit in the basement of the building, everything about them is clear. You can almost touch the data. When a business moves to the cloud, questions arise.

For some companies, migrating to the cloud means losing control not only over part of the infrastructure but also over the data. This often happens because the areas of responsibility are not transparently divided between the customer and the provider.

For example, such difficulties can arise in information security and fault tolerance.

Types of cloud services

Cloud servers

This is the name for a single server or a set of virtualized servers that provide their resources over a network. They can be used to store data, run ML workloads, organize work for remote employees, or monitor infrastructure.

Cloud servers can quickly change configuration and scale as the situation requires. This is their key difference from dedicated servers.

With Servercore, you can get a ready-to-use cloud server in a couple of minutes.

There are four main ways to implement cloud infrastructure:

- Public cloud. A public cloud is always implemented on the provider's side. Each customer can create virtual machines without restrictions but has no physical access to the servers. The configuration can be changed at any time, which is useful when the load changes: RAM or vCPUs are easy to add or remove. The provider prepares the infrastructure up to the guest operating systems, so nothing can be customized at deeper levels.

- Private cloud. There are two ways to implement a private cloud: hosted by a provider or on-premise, that is, on the company's own equipment. The key feature is that all resources belong to a single customer. This option suits companies that need to install their own operating systems and equipment, such as firewalls.

[Figure: the relationship between public and private clouds]

- Hybrid cloud. This model connects part of the customer's on-premise infrastructure with infrastructure supplied by the provider. Companies gain the provider's advantages (security certificates, attestation, and so on) and resources while continuing to use their own capacity.

- Multicloud. The project's infrastructure consists of several clouds from different providers. It is usually used to build fault-tolerant services or to optimize the budget. For example, the main project may be deployed with one provider and the data store for training ML models with another, because that is cheaper even with the more complex integration.

Object storage

The service is designed for storing unstructured and semi-structured data, such as media files, logs, or backups. The storage scales easily and can accumulate billions of objects, which matters, for example, when building ML models. Another consideration is how the storage integrates into the project's infrastructure. Among the must-haves of a modern service, check whether the provider offers S3 replication and the FTP/FTPS and SFTP protocols.

Backups and BaaS

A popular misconception is that companies get backups of their infrastructure from the provider along with hosting. Creating backups and Backup-as-a-Service are different things. BaaS is a separate cloud service that helps establish the process and plan resources. Creating backups requires storage space, physical space for backup servers, specialized software, and administrators' time. The larger the data volume, the higher the costs. That is why companies often choose a cloud solution to organize this work on a turnkey basis.

For example, BaaS can be used to configure scheduled backups, and a combination of data recovery methods helps keep the infrastructure running without noticeable downtime.
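Scheduled backups usually come with a retention policy that decides which copies stay in storage. The following sketch shows one common scheme, assumed here purely for illustration: keep every backup from the last few days plus the Sunday backups from the last few weeks. The function name and default values are hypothetical and do not describe any particular BaaS product.

```python
from datetime import date, timedelta


def backups_to_keep(backup_dates, today, daily=7, weekly=4):
    """Select the backups a simple daily/weekly retention policy would keep.

    daily  -- keep every backup taken within the last `daily` days
    weekly -- also keep Sunday backups taken within the last `weekly` weeks
    """
    keep = set()
    for d in backup_dates:
        age = (today - d).days
        if age < 0:
            continue  # ignore dates in the future
        if age < daily:
            keep.add(d)
        elif d.weekday() == 6 and age < weekly * 7:  # 6 means Sunday
            keep.add(d)
    return sorted(keep)


# One backup per day during January 2024, evaluated on 31 January.
january = [date(2024, 1, 1) + timedelta(days=i) for i in range(31)]
kept = backups_to_keep(january, today=date(2024, 1, 31))
print(len(kept), "of", len(january), "backups kept")
```

Everything outside the returned list can be deleted, which keeps storage costs bounded as the data volume grows.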

Cloud databases

Besides the best-known solutions such as MySQL and PostgreSQL, providers usually offer a number of other options for working with data. The provider takes on selecting and configuring optimal hardware, deploying clusters, and defining the procedure to follow if the master is lost. In that case, some create a new master from a replica chosen by quorum, while others bring the original master back up, because that can take less time than copying the data over.
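The quorum-based approach can be sketched as a majority vote: a replica is promoted only if more than half of the cluster's nodes back it. This is a deliberately minimal illustration with hypothetical names, not the algorithm of any specific database or provider; production systems rely on consensus protocols with terms, timeouts, and replication-lag checks.

```python
from collections import Counter


def elect_new_master(votes, cluster_size):
    """Return the replica backed by a strict majority of the cluster, else None.

    votes        -- mapping of voting node -> replica it proposes as master
    cluster_size -- total number of nodes, including unreachable ones
    """
    if not votes:
        return None
    candidate, count = Counter(votes.values()).most_common(1)[0]
    return candidate if count > cluster_size // 2 else None


# Five-node cluster whose master is lost; the four surviving replicas vote.
votes = {"replica-1": "replica-2", "replica-2": "replica-2",
         "replica-3": "replica-2", "replica-4": "replica-1"}
print(elect_new_master(votes, cluster_size=5))
```

Requiring a majority of the whole cluster, not just of the nodes that answered, prevents two isolated groups of replicas from each promoting their own master.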

Server geography

Cloud providers may have points of presence around the world. To deliver data quickly to users in a specific region, companies use a CDN and try to build infrastructure in the nearest hub. This tactic is justified: it reduces the bounce rate and helps companies promote themselves more effectively in local markets.

Geo-distribution of data also affects infrastructure fault tolerance. If an accident occurs in one data center but the project's data is distributed, the service keeps running fully or partially. A complete data center outage is an unlikely scenario, but distributing servers this way covers that risk.

Cloud regions and availability zones

A region is an infrastructure installation located in a separate data center. Each region has its own compute resources, its own networks and API, and sometimes its own rack vendors. Regions are managed through a shared authorization client and web interface. Virtualization and storage hosts within a region are grouped into a smaller entity, availability zones. Zones can be divided by different criteria, for example by certification or by configuration capacity.

Availability zones usually refer to segments inside data centers. For example, a provider has six data centers in different cities across the country, yet some services may be available in only one of them. This usually applies to certified segments suitable for hosting sensitive data.

Each zone is isolated from hardware and software failures in other availability zones. To ensure fault tolerance and protect the infrastructure from data loss, companies deploy across several availability zones.
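The benefit of using several zones can be estimated with a short calculation. The sketch below assumes that zones fail independently, which is the idealized reading of the isolation described above; zone names, instance names, and the 99% figure are hypothetical.

```python
from itertools import cycle


def combined_availability(zone_availability, zones):
    """Probability that at least one of `zones` independent zones is up."""
    return 1 - (1 - zone_availability) ** zones


def spread_across_zones(instances, zones):
    """Place instances round-robin so that no single zone holds all of them."""
    placement = {zone: [] for zone in zones}
    for instance, zone in zip(instances, cycle(zones)):
        placement[zone].append(instance)
    return placement


print(combined_availability(0.99, 1))
print(combined_availability(0.99, 2))
print(spread_across_zones(["web-1", "web-2", "web-3"], ["zone-a", "zone-b"]))
```

With two independent zones that are each available 99% of the time, the chance that both are down at once is 0.01 × 0.01, so the combined availability is about 99.99%. Correlated failures would lower that figure in practice.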

Cloud energy efficiency

A data center with 200 racks can draw power on the order of a megawatt or more, which makes it a major energy consumer. It is an important part of urban infrastructure, so any changes in this area carry many risks.

On the one hand, companies are now trying to minimize their carbon footprint and make their operations green. On the other hand, many data centers were originally housed in premises not designed for heavy loads, and every year such sites find it harder to maintain their server fleets. Cloud providers are gradually increasing power density by designing spaces with greater rack capacity and server density. When the provider itself builds and maintains the data centers, this is a big advantage: the provider knows the specifics of working with racks and can design the halls and server rooms properly.

Maintaining a data center in-house is often simply impossible for lack of suitable premises. Laying utilities is expensive, and electricity bills become an unpleasant expense item. It is easier to hand these tasks over to a provider and invest the resources in the team or the infrastructure.

Conclusion

The share of companies that use cloud computing grows every year, and the trend is likely to continue. Cloud services fully demonstrated their potential during the COVID-19 pandemic and have since earned a special status. This is a natural process with several drivers.

- Providers have learned to certify their clouds to comply with the data processing rules of local regulators.

- The absence of capital expenditure lowers the barrier to entry and lets companies build infrastructure brick by brick, gradually scaling the parts that matter. Ordering components or entire dedicated servers in this mode is problematic.

- Fast deployment helps test hypotheses and work with different loads in a convenient environment.

How Servercore can help

Servercore provides infrastructure and a set of PaaS solutions so that businesses can grow efficiently and build competitive applications. The company's strength is a team with 14 years of experience building fault-tolerant systems around the world. Servercore provides basic DDoS protection and fixes the quality of service in an SLA.

Servercore helps companies grow in a comfortable environment using modern solutions and approaches to building infrastructure. The team strives to make working with servers intuitive and therefore pays close attention to the control panel. A ready-to-use cloud server is available in just a few minutes. Free, qualified 24/7 technical support is also available to help with any question.

Servercore takes the specifics of local business into account: billing is in local currency on a pay-as-you-go basis. The provider's data centers are PCI DSS certified and comply with international and local standards for data storage and processing: GDPR, No. 94-V (Kazakhstan), ZRU-547 (Uzbekistan), and the Data Protection Act (Kenya).


+7 (727) 344-27-06  +998 (71) 205-16-83
