A decade ago, even the largest corporations were building monolithic applications and barely considered that there might be alternatives, such as those Managed Kubernetes now makes practical. The IT solutions market was evolving rapidly, and competition was sidelining services that could not scale quickly or stay stable. What’s the point of unique features if users can’t rely on them whenever they need to?

Service failures and crashes harm the reputation of any business. The cause isn’t always workload or code quality; often the problem lies deeper, in the architecture itself. As a result, the monolith has been steadily losing ground to the microservice architecture in recent years. In this article, we share how Kubernetes has helped businesses by automating the handling of containers.

Microservices became widely used when the client-server architecture started evolving along the principles of distributed systems. Distributed systems differ from the traditional client-server model in that multiple applications run on different but interconnected nodes.

Microservices are not a magic solution on their own. For instance, an authorization error can still make a service unavailable, but the failure is confined to a single component rather than the entire application, which is where damage control comes in. So, are monoliths outdated, and does everyone need microservices? Not quite.

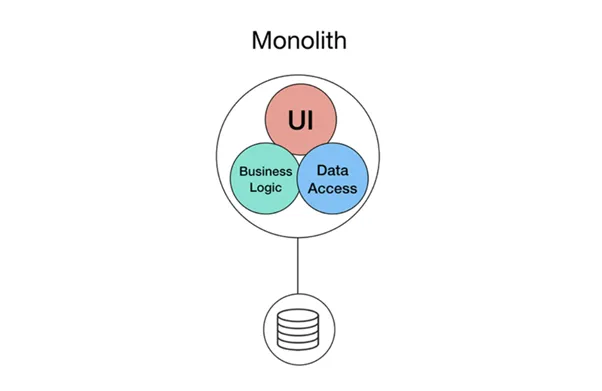

Features of Monolithic Architecture

The architecture selection is influenced not only by the project objectives, but also by the size of the team. For a team of 2–5 members, it’s more convenient to work with a monolith. Why is that?

● Operating within a single application environment solves issues with logging and security.

● There’s no need to focus as much on component interconnectivity as with a microservice setup.

● The status of the IT infrastructure is easy to monitor.

● Integration is simpler and less costly, eliminating the need for a DevOps specialist in the team.

When introducing a new product, a monolith is a more logical choice, as the code base might not be extensive. There’s no point in breaking down individual segments into microservices. Meanwhile, not all large-scale projects are eager to break down their monolith solutions. For many, horizontally scaling the infrastructure is more effective than overhauling all services.

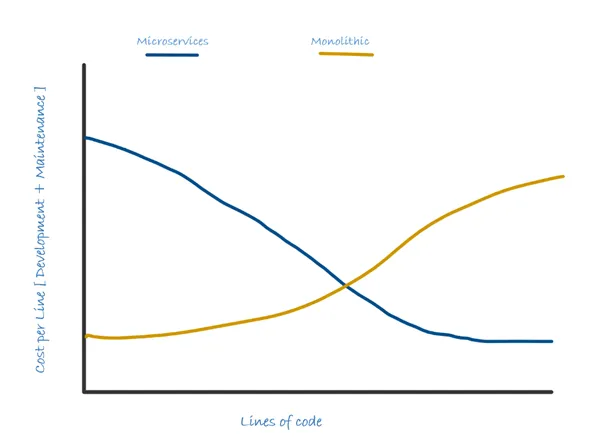

The chart shows an estimate of how the cost per line of code changes as the project grows. Two trends stand out:

● In a monolith, the larger the code base, the more each line of code costs.

● In microservices, the larger the code base, the less each line of code costs.

Monolith Is Not Fatal

Breaking down a monolith into microservices is a demanding task, but it can be split up and tackled step by step. It’s crucial to choose the project architecture based on business needs. The process is similar to repotting houseplants: when a plant outgrows its pot, it’s best to divide it and replant it in several pots. This way, the business will grow even faster.

Netflix was one of the first major companies to adopt a microservice architecture. Back in 2009, the company decided that this tech solution would propel them to the forefront of streaming platforms. In 2015, their team of DevOps engineers even received a JAX Special Jury Award for implementing a solution that allowed up to a thousand deployments per day.

The shift from monolith to microservices typically follows two scenarios — the Big Bang approach and gradual refactoring:

● In the Big Bang scenario, the entire team concentrates on refactoring. New feature releases are put on hold during this time.

● In gradual refactoring, developers roll out new features as components of the microservice architecture. These components communicate with the monolith through APIs. From this point on, no new code is added to the monolith itself. As the application is reworked, the monolith’s functionality is gradually handed over to the microservices.
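The gradual scenario can be sketched as a small routing facade: requests go to the monolith by default, and individual path prefixes are switched over to microservices as they are extracted. This is only an illustration under stated assumptions. The names `Facade`, `monolith_handler`, and `payments_service` are hypothetical, and a real setup would typically put an API gateway or reverse proxy in this role.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def monolith_handler(path: str) -> str:
    """Hypothetical stand-in for the legacy monolith."""
    return f"monolith handled {path}"


def payments_service(path: str) -> str:
    """Hypothetical stand-in for a newly extracted microservice."""
    return f"payments-service handled {path}"


class Facade:
    """Sends a request to a microservice if its path prefix has been
    migrated, and falls back to the monolith otherwise."""

    def __init__(self, fallback: Handler) -> None:
        self._fallback = fallback
        self._routes: Dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        """Hand one slice of functionality over to a microservice."""
        self._routes[prefix] = handler

    def handle(self, path: str) -> str:
        # The longest matching prefix wins, so "/payments/refunds" can be
        # migrated separately from "/payments".
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path.startswith(prefix):
                return self._routes[prefix](path)
        return self._fallback(path)


facade = Facade(monolith_handler)
facade.migrate("/payments", payments_service)
print(facade.handle("/payments/42"))
print(facade.handle("/catalog/1"))
```

Each call to `migrate` moves one piece of functionality out of the monolith, while everything not yet migrated keeps working through the fallback.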

If a team chooses a refactoring approach, they should take into account the following aspects:

● Determining which business components should be extracted from the monolith and re-released as microservices.

● Figuring out how to break down databases and applications.

● Understanding how to test the dependencies of the newly created application microservices.

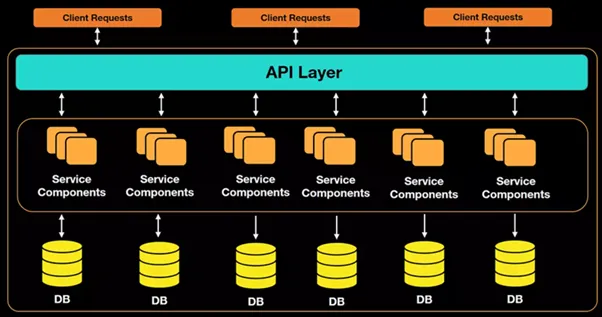

How Microservice Architecture Functions and the Importance of Containers

The fundamental principle of the architecture is that each microservice is designed to solve a specific business need, such as handling payments for online purchases. It can be tested, deployed, or updated independently without interfering with the operation of other components, provided that it is configured correctly. Real implementations always bring surprises, but this division still results in better fault tolerance than a monolith.

Packaging microservices into containers marked a significant step in the evolution of web technologies. There’s no longer a need to maintain language consistency, so each microservice can be written in the language that best suits its business logic.

Each container includes the code, system tools, the runtime environment, and the necessary libraries. This resolves numerous issues, but the key point is that containers are isolated from one another.

The distinction from a monolith can be illustrated with the analogy of toy blocks. In microservices, a castle is constructed from containers of identical shapes. They can be added at any moment if the load on the castle (project) escalates, or can be updated without the user noticing the change. The monolith is more similar to a Jenga tower. It’s easier to remove blocks, but the risks are higher.

For instance, if a business is seasonal, a surge of clients can overload the IT infrastructure. Heavy workload can also occur during sales and promotional events. A resource failure at such a moment can wipe out the gains of a crucial financial period.
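How many containers to add under a seasonal peak can be estimated with a proportional rule. The sketch below follows the formula described in the Kubernetes Horizontal Pod Autoscaler documentation (desired replicas = ceil(current replicas × current metric / target metric)). The function name, the load units, and the min/max bounds are assumptions chosen for illustration.

```python
import math


def desired_replicas(current_replicas: int,
                     current_load: float,
                     target_load: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Estimate how many container replicas are needed so that the
    per-replica load returns to `target_load`.

    `current_load` and `target_load` are per-replica averages in the
    same unit (for example, CPU percent or requests per second).
    """
    if current_replicas < 1 or target_load <= 0:
        raise ValueError("need at least one replica and a positive target")
    raw = math.ceil(current_replicas * current_load / target_load)
    # Clamp to the allowed range so a spike cannot request unlimited capacity.
    return max(min_replicas, min(max_replicas, raw))


# Three replicas at 90% average load, with a 60% target.
print(desired_replicas(3, 90.0, 60.0))
```

The same rule also scales down: when the load per replica drops below the target after the peak, the result is smaller than the current replica count.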

Under heavy workload, a container may “burn out”, and this is expected. To restore functionality, it is enough to replace it with a fresh copy.

The Early Days of Kubernetes

The container concept implies that the replacement of “burned-out” containers can be automated. The service then avoids downtime, because new containers continuously replace the failed ones. The only issue is that managing such a system by hand is laborious. To avoid routine configuration work, various container orchestration systems emerged on the market; they manage containers and help debug operational scenarios.
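The automated replacement described above can be sketched as a reconciliation step: compare the desired number of healthy containers with what is actually running, and start replacements for any that failed. This is a toy model rather than how any particular orchestrator is implemented. `Container`, `new_container`, and `reconcile` are hypothetical names.

```python
from dataclasses import dataclass
from itertools import count
from typing import List


@dataclass
class Container:
    name: str
    healthy: bool = True


_ids = count(1)  # simple source of unique suffixes for container names


def new_container(image: str) -> Container:
    """Pretend to start a fresh container from the given image."""
    return Container(name=f"{image}-{next(_ids)}")


def reconcile(containers: List[Container], image: str,
              desired: int) -> List[Container]:
    """Drop unhealthy containers and start replacements until exactly
    `desired` healthy containers are running."""
    alive = [c for c in containers if c.healthy]
    while len(alive) < desired:
        alive.append(new_container(image))
    # If there are more healthy containers than desired, keep the first ones.
    return alive[:desired]


pool = [new_container("shop") for _ in range(3)]
pool[1].healthy = False          # one container "burns out"
pool = reconcile(pool, "shop", desired=3)
print([c.name for c in pool])
```

An orchestrator runs a check of this kind continuously, which is why the failed container is replaced without anyone intervening by hand.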

Orchestrators help establish dependencies between containers and deploy them without any hocus-pocus. Kubernetes has emerged as one of the most popular solutions and, importantly, it is a graduated project of the Cloud Native Computing Foundation (CNCF). The CNCF team consistently tracks tools that embody the key principles of cloud technologies and fosters projects within the Cloud Native concept.

Over a span of 9 years, Kubernetes has evolved from an in-house solution built by a team of Google developers into an industry standard. It has turned out to be beneficial for both developers and businesses. Nevertheless, there are some less obvious pitfalls:

● It’s challenging to find skilled professionals.

● Not every company can afford to maintain its own team of DevOps engineers.

Without staff who have the necessary experience, deploying like Netflix becomes a challenge. The solution lies in ready-to-use Kubernetes clusters: a PaaS offering that automates essential operational support tasks, enabling businesses to concentrate on releasing new features or refactoring.

Kubernetes as a Managed Service

Any managed service is built on the principle of delegation. Businesses free up resources to work on the product, can focus on customer needs, and delegate infrastructure tasks to the provider.

The benefits of Managed Kubernetes in Servercore:

● Businesses can save resources by not having to deploy and maintain the cluster themselves. The team has accumulated the necessary experience over 11 years to deliver a reliable solution.

● It offers medium and large businesses the chance to establish a fault-tolerant platform for operating in various clouds, with diverse codebases and operating systems. Servercore enables unlimited scaling of computing power.

● The provider ensures the IT infrastructure’s availability and keeps the Kubernetes versions up to date.

● In Servercore, Kubernetes can be set up as a solution with cluster auto-healing. This allows for the automatic replacement of “burned-out” containers, preventing downtime.

● Through the Servercore control panel, you can obtain a ready-made cluster in just a couple of clicks in any availability zone: Kenya (Nairobi), Kazakhstan (Almaty), and Uzbekistan (Tashkent).

Managed Kubernetes facilitates the use of essential services for web or mobile applications. Being fully compatible with other Servercore products, this solution can be quickly deployed and utilized.

Do you have any questions? Contact us. Our specialists are available 24/7.