Introduction

Here’s how to deploy an application on a rented server: setting up nginx, delivering code updates, and configuring Docker.

This article will cover deploying a React application, renting a cloud server, and setting up nginx. Here’s the essential toolkit for a frontend developer:

- Uploading the project to GitHub.

- Renting and setting up a cloud server via SSH.

- Configuring nginx to serve static files.

- Bundle compression.

- Domain connection.

- HTTPS setup.

- Configuring Docker.

Uploading the Project to GitHub

We have basic routing and a few empty pages. There’s a simple example with a counter that displays values on button presses. The project is built with Vite.

Our focus is solely on running in dev mode and building the app itself. For Vue or Angular developers, everything will work the same. The exception is applications that require server-side rendering: the deployment process differs for those.

The application features several buttons, displays values, and allows page navigation. This is enough to explore the deployment process. To work with this project on a server, it must be uploaded to a remote repository such as GitHub, GitLab, or Bitbucket. Run git init, git add, and git commit, and the code is ready for further operations. Detailed information on setting up a repository can be found here.
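The upload steps above can be sketched as a small shell function. The remote URL is a placeholder for a repository you have already created, and the branch name master is assumed because it is the one used later in this article.

```shell
# Sketch: publish the current project folder to a new remote repository.
# The URL passed in is hypothetical; substitute your own repository.
publish_repo() {
  remote_url="$1"
  git init
  git add .
  git commit -m "Initial commit"
  git branch -M master               # make sure the branch is called master
  git remote add origin "$remote_url"
  git push -u origin master
}

# Usage (hypothetical URL):
# publish_repo "git@github.com:your-name/vite-deploy-test.git"
```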

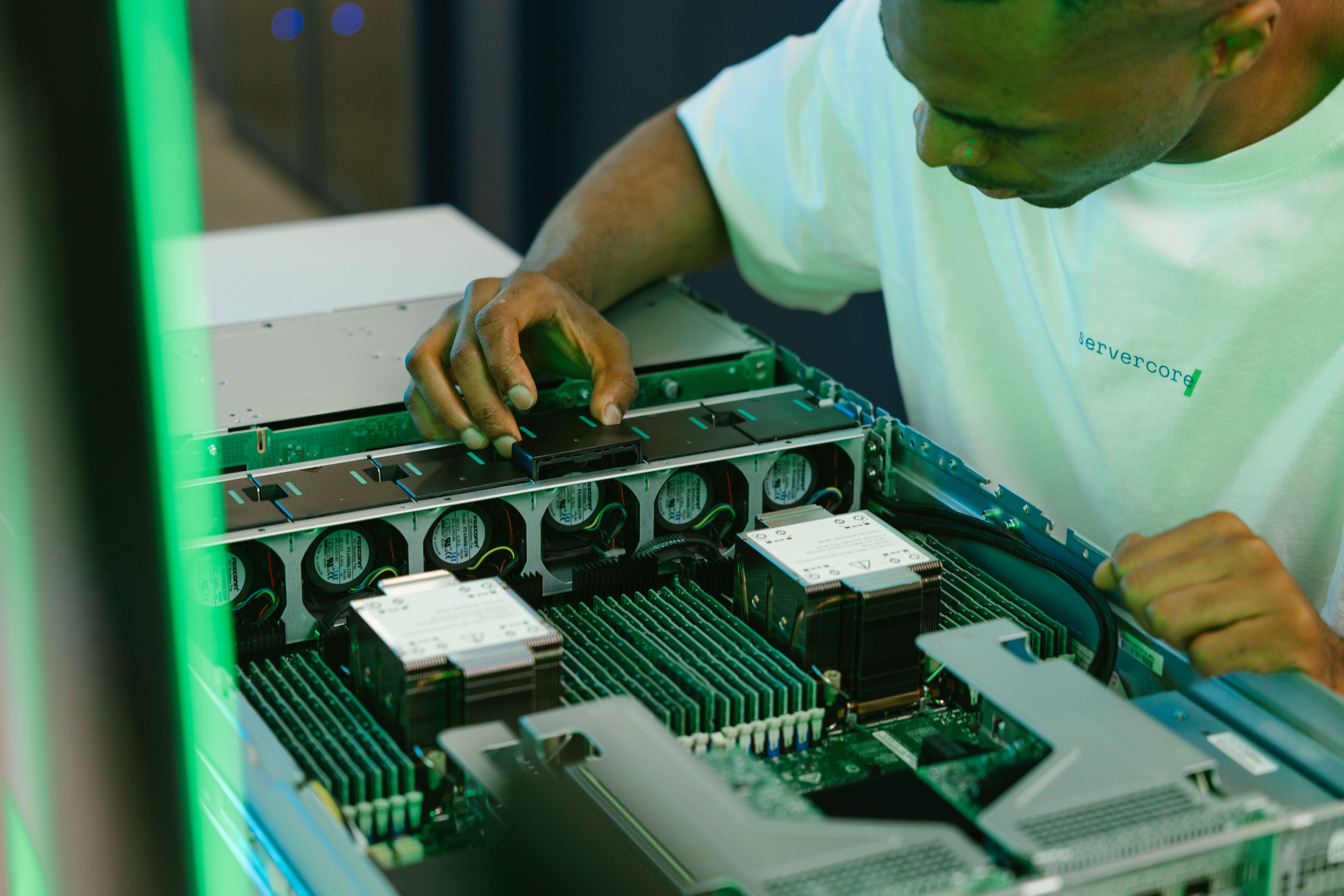

Renting and Setting up a Cloud Server via SSH

Time to rent a cloud server. Let’s use the Servercore infrastructure for this purpose. First, create a project in the Cloud Platform section. Select your desired server region and click Create. A configurator will open, allowing you to flexibly adjust server parameters. For instance, select an operating system of your choice.

Select either a fixed configuration or customize your own. Set the HDD size to at least 10 GB. You can add an optional SSD, configure networks, and set up both basic and advanced configurations. Once the server is set up, you can connect via the public IP address.

Check in the terminal whether an SSH client is installed. Running the ssh command with no arguments should yield this result.

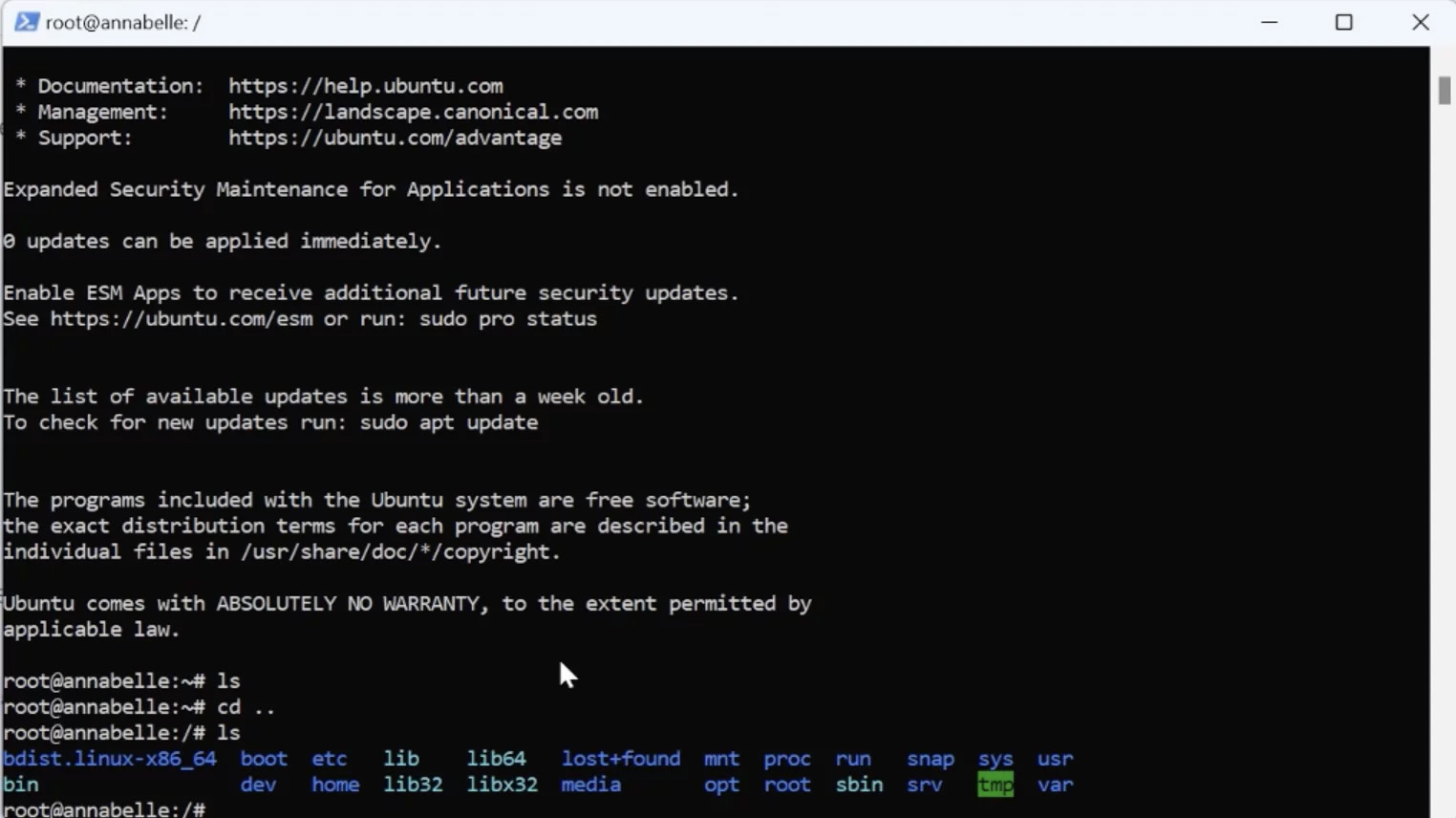

Now, let’s connect to the server using SSH. Copy the password from the control panel and proceed to the terminal. You need to provide a login. In our case, it’s “root”. Next, enter the IP address preceded by “@”. Press Enter and type in the password.
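As a minimal sketch, the connection step looks like this. The IP address is a documentation-only placeholder; use the public IP shown in your control panel.

```shell
# Sketch: open an SSH session as root on the rented server.
# 203.0.113.10 is a placeholder address, not a real server.
SERVER_IP="203.0.113.10"
SSH_TARGET="root@${SERVER_IP}"

connect_to_server() {
  ssh "$SSH_TARGET"   # prompts for the password copied from the control panel
}

echo "Run: ssh ${SSH_TARGET}"
```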

Congratulations – you’ve successfully connected to the server and now have full access to the operating system and file system.

Here, you can execute any commands available on Ubuntu, as that’s the operating system we’ve selected.

Let’s now update the APT package index. This is necessary to install Node.js, Git, and Docker if needed.

Use the following commands:

sudo apt update

sudo apt install git

After installing Git, navigate to the root of the file system and clone the repository there. Since the repository is public, it can be cloned with “git clone” without any credentials.
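Here is a rough sketch of the clone step. The repository URL is hypothetical; the helper only illustrates that git clone creates a folder named after the repository, which is where the following commands are run.

```shell
# Sketch: clone a public repository into the file system root.
# The folder git creates is the last URL segment without ".git".
project_dir_from_url() {
  basename "$1" .git
}

clone_project() {
  repo_url="$1"
  cd / && git clone "$repo_url" && cd "$(project_dir_from_url "$repo_url")"
}

# Usage (hypothetical URL):
# clone_project "https://github.com/your-name/vite-deploy-test.git"
```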

Now you have index.html and the source code, and you can install dependencies. A freshly provisioned cloud server has no Node.js, so install Node Version Manager (NVM) first. NVM is required to manage Node.js versions flexibly.

To make everything work, we will need to add an environment variable. Copy these lines:

export NVM_DIR="$([ -z "${XDG_CONFIG_HOME-}" ] && printf %s "${HOME}/.nvm" || printf %s "${XDG_CONFIG_HOME}/nvm")"

[ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh"

After entering these commands, NVM should start working.

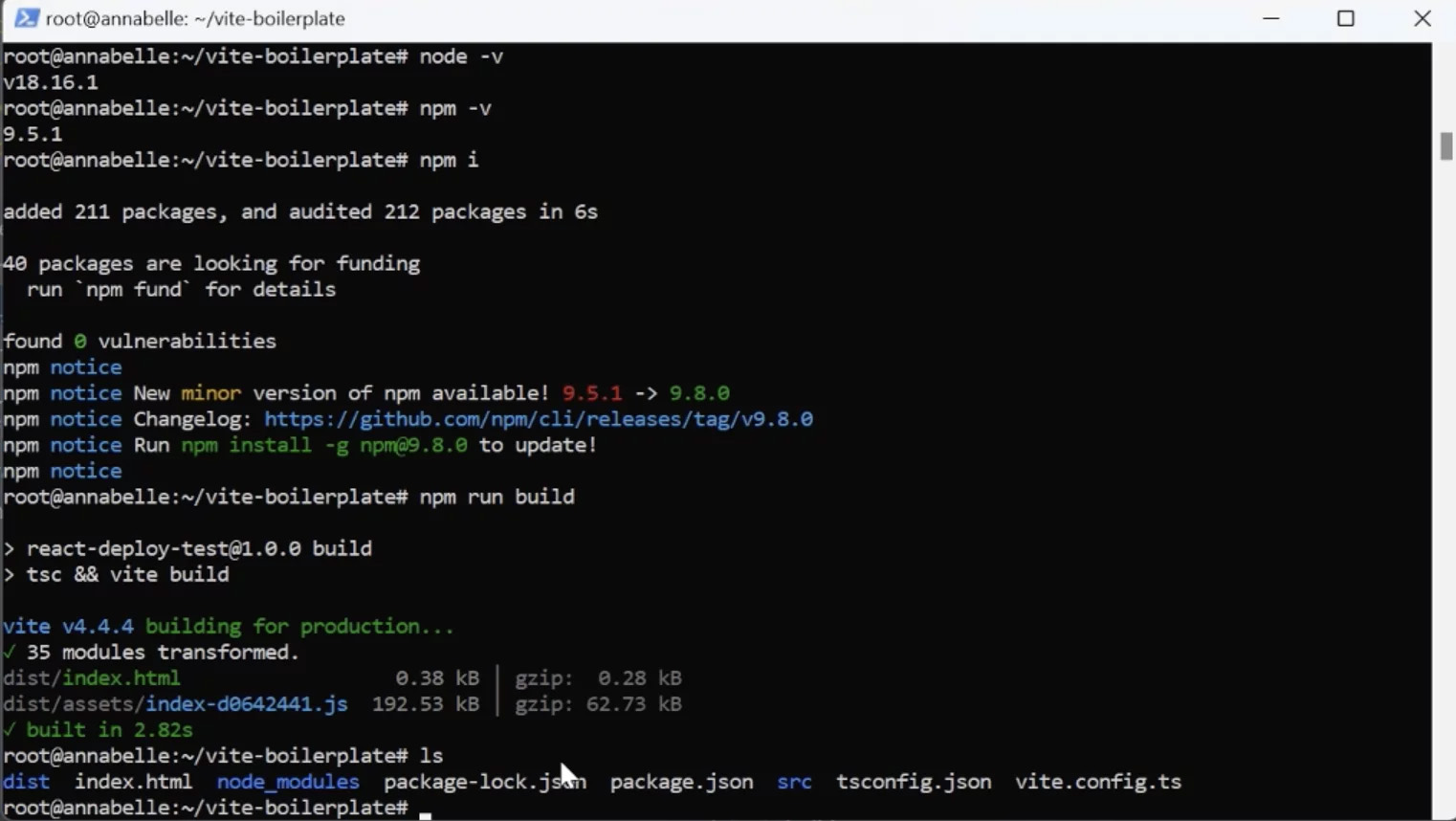

At the time of writing, the current LTS version of Node.js is v18.16.1. Install it using NVM. To verify the installation, use this command:

node -v
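Put together, the Node.js setup might look like the sketch below. The NVM release tag is an assumption (check the nvm README for the current one); the Node.js version is the one named in this article.

```shell
# Sketch: install NVM, load it into the current shell, then install Node.js.
NVM_VERSION="v0.39.3"     # assumed release tag; verify against the nvm README
NODE_VERSION="18.16.1"    # LTS version used in this article

install_node() {
  # Download and run the official nvm install script.
  curl -o- "https://raw.githubusercontent.com/nvm-sh/nvm/${NVM_VERSION}/install.sh" | bash
  # Load nvm without reopening the terminal.
  export NVM_DIR="$HOME/.nvm"
  [ -s "$NVM_DIR/nvm.sh" ] && . "$NVM_DIR/nvm.sh"
  nvm install "$NODE_VERSION"
  node -v
}

# Usage:
# install_node
```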

Now it’s time to install the packages. Type in:

npm i

There’s a dedicated script to build the project in package.json.

Let’s delete the dist folder and attempt to run it:

npm run build

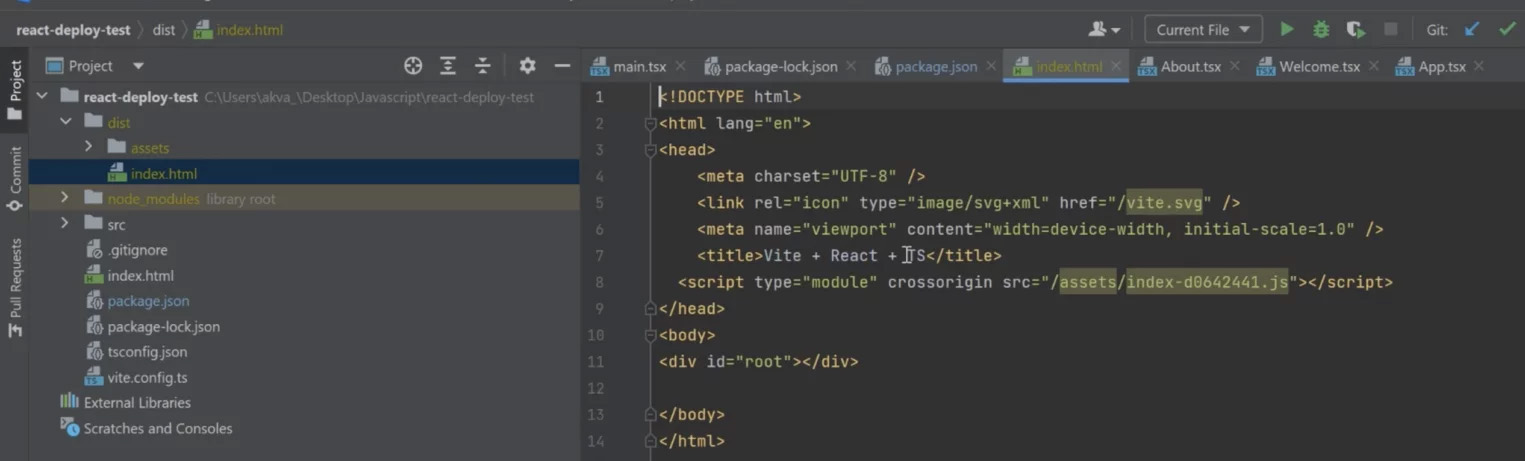

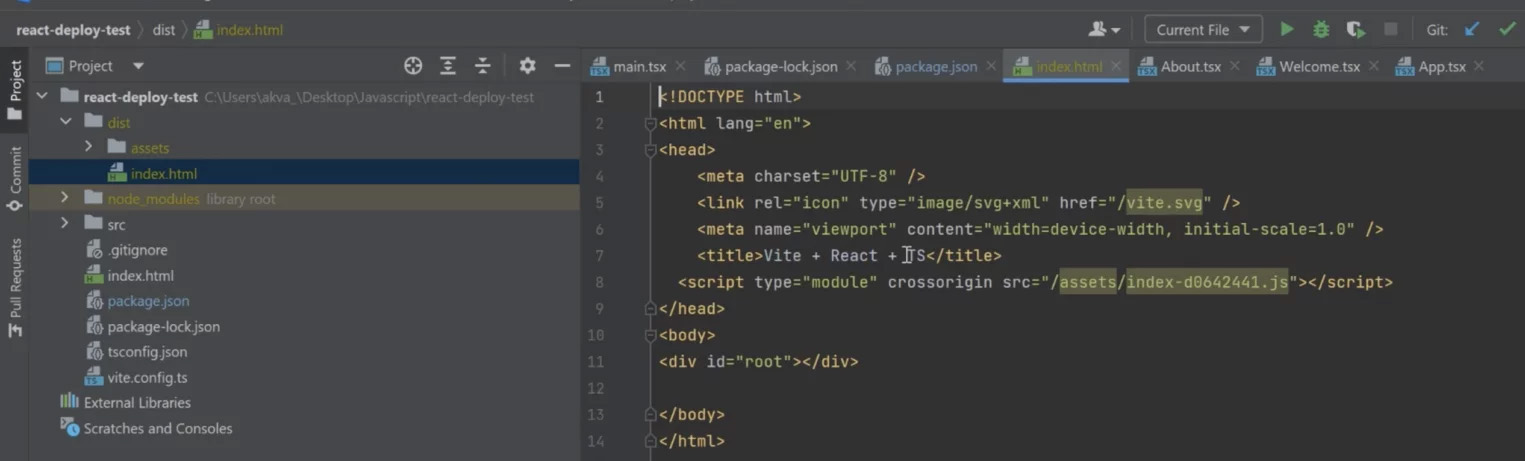

The dist folder appears, containing index.html with a connected script inside. The final item is our .js bundle, the result of compiling the TypeScript code and the React counter.

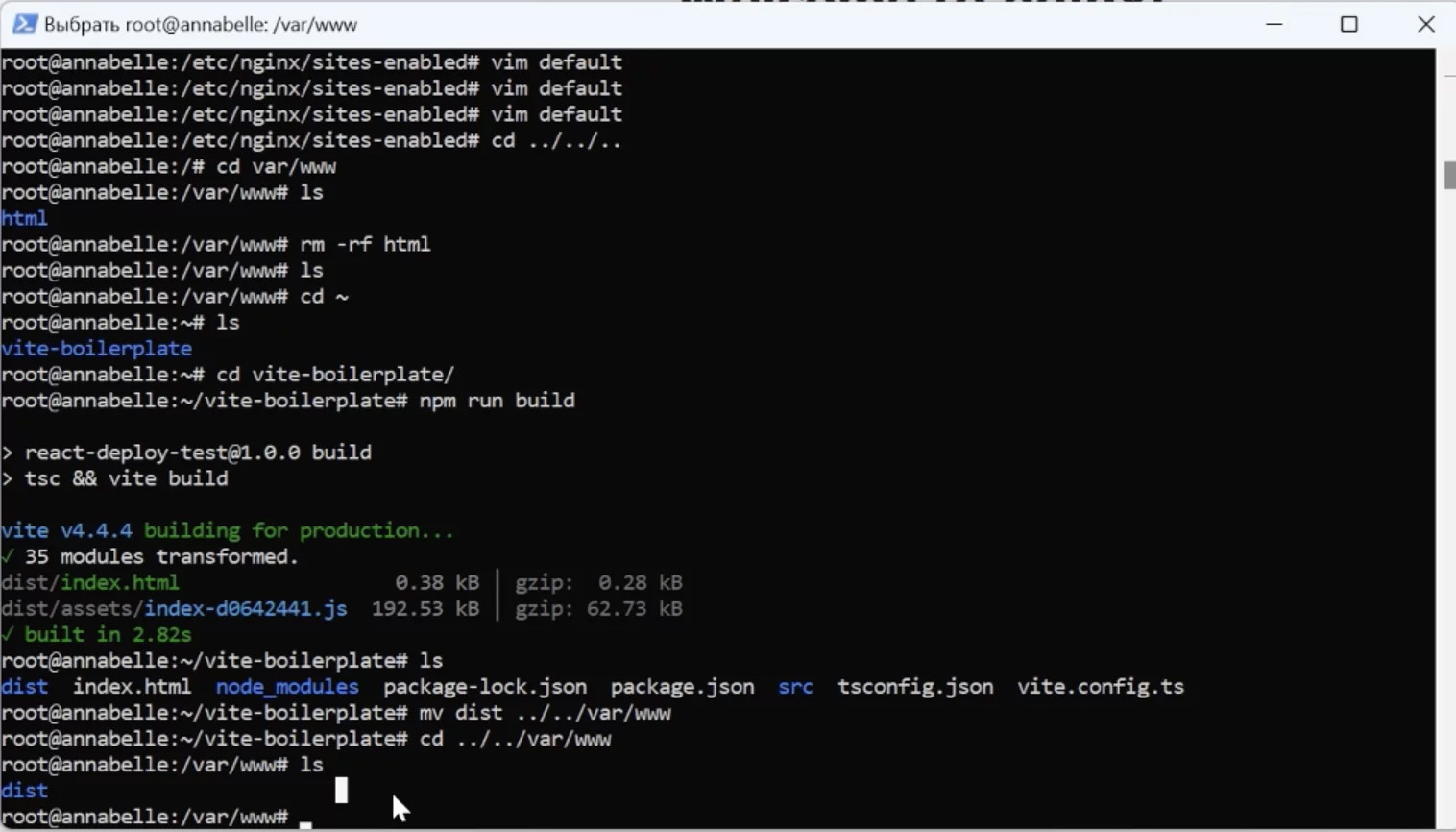

We will now deploy the same build on a remote server. Type “npm run build” and wait for the build to complete successfully. If you enter the ls command, you’ll see the dist folder containing all necessary files inside.

We need to deliver the build containing index.html to users so they can access our domain and receive these static files from the server. For this purpose, we use nginx.

The command “sudo apt update” has already been performed. Now let’s install nginx itself using APT. Copy this command:

sudo apt install nginx

Configuring nginx

Nginx is a user-friendly web server. It’s flexible, customizable, and well suited to serving static files. It can be set up in just a few minutes to serve images, HTML, CSS, and JS files. As an alternative, you could use Apache. While a static server can also be set up using Node.js, nginx is one of the most popular and fastest solutions for such tasks.

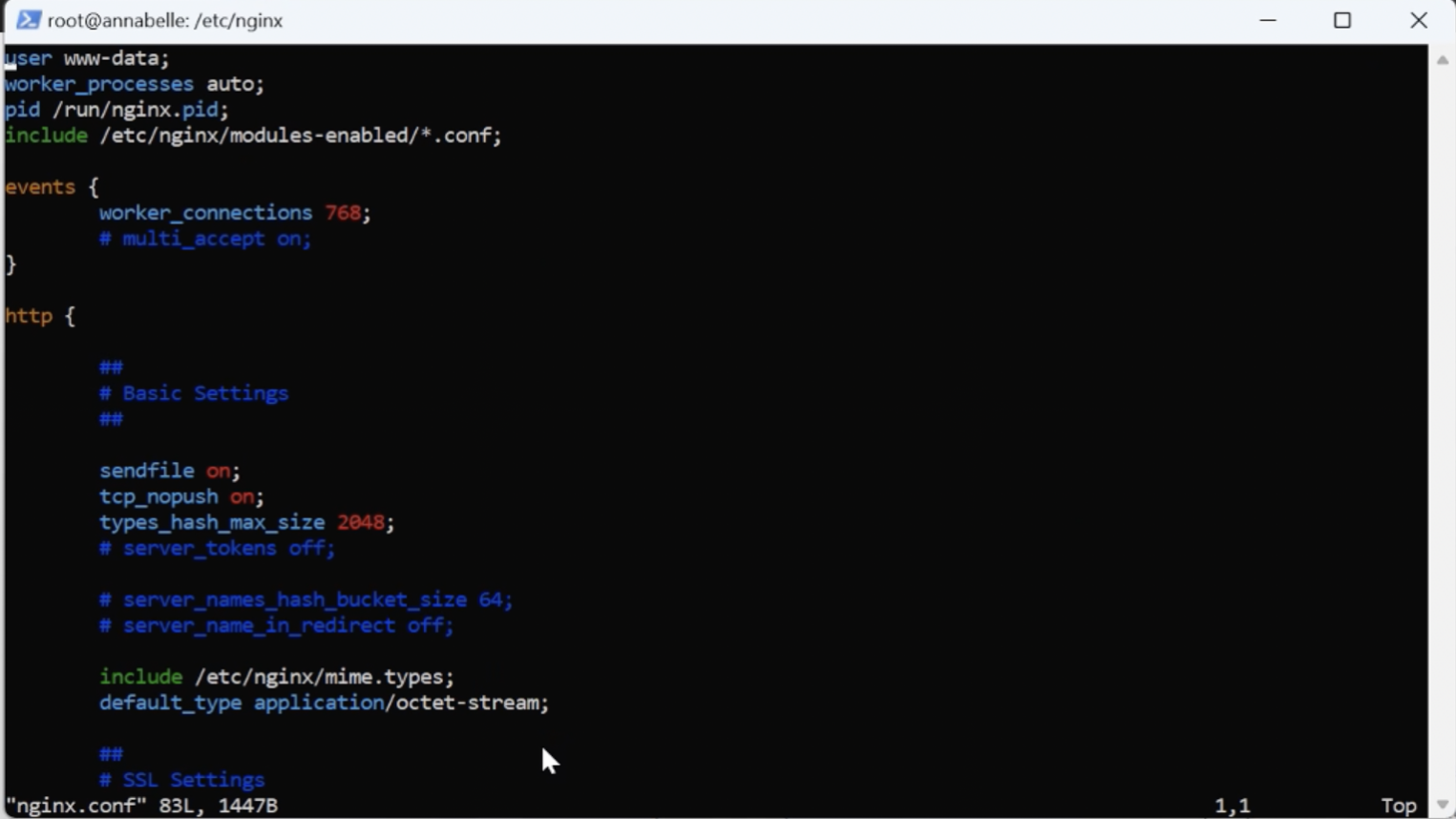

Let’s navigate to the /etc folder and use the ls command to view its contents. The nginx directory will be displayed. There are many files inside it, but we’re interested in the main configuration file, nginx.conf.

Let’s open it with Vim and check its contents. If Vim isn’t installed by default, we should install it first to make file handling easier. Then run:

vim /etc/nginx/nginx.conf

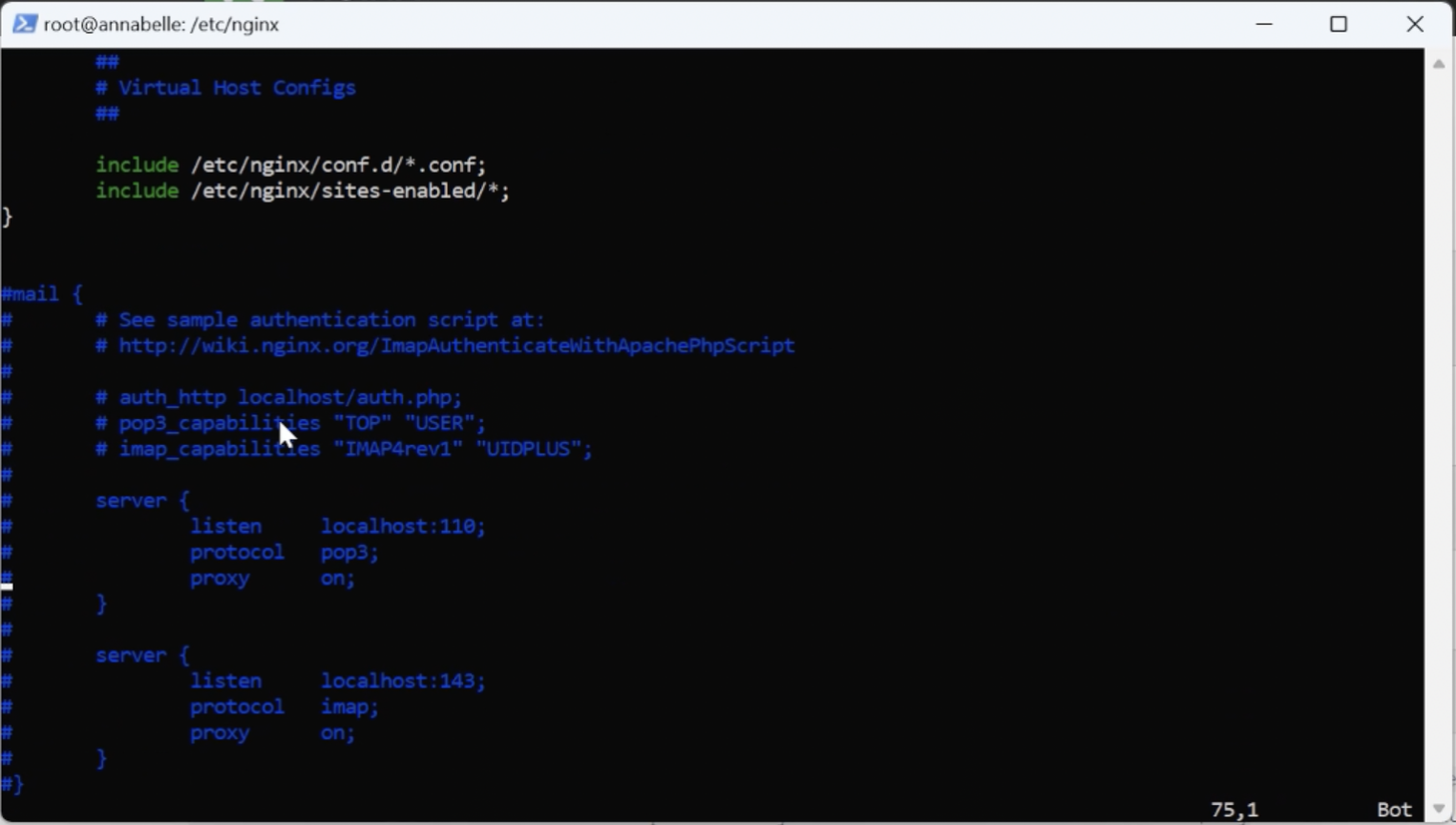

Now, focus on the two green “include” directives at the very bottom. These allow adding new configuration files that extend the main one.

Working with Vim for the first time can be challenging. First, exit by pressing Escape, then type :q! to quit the editor without saving.

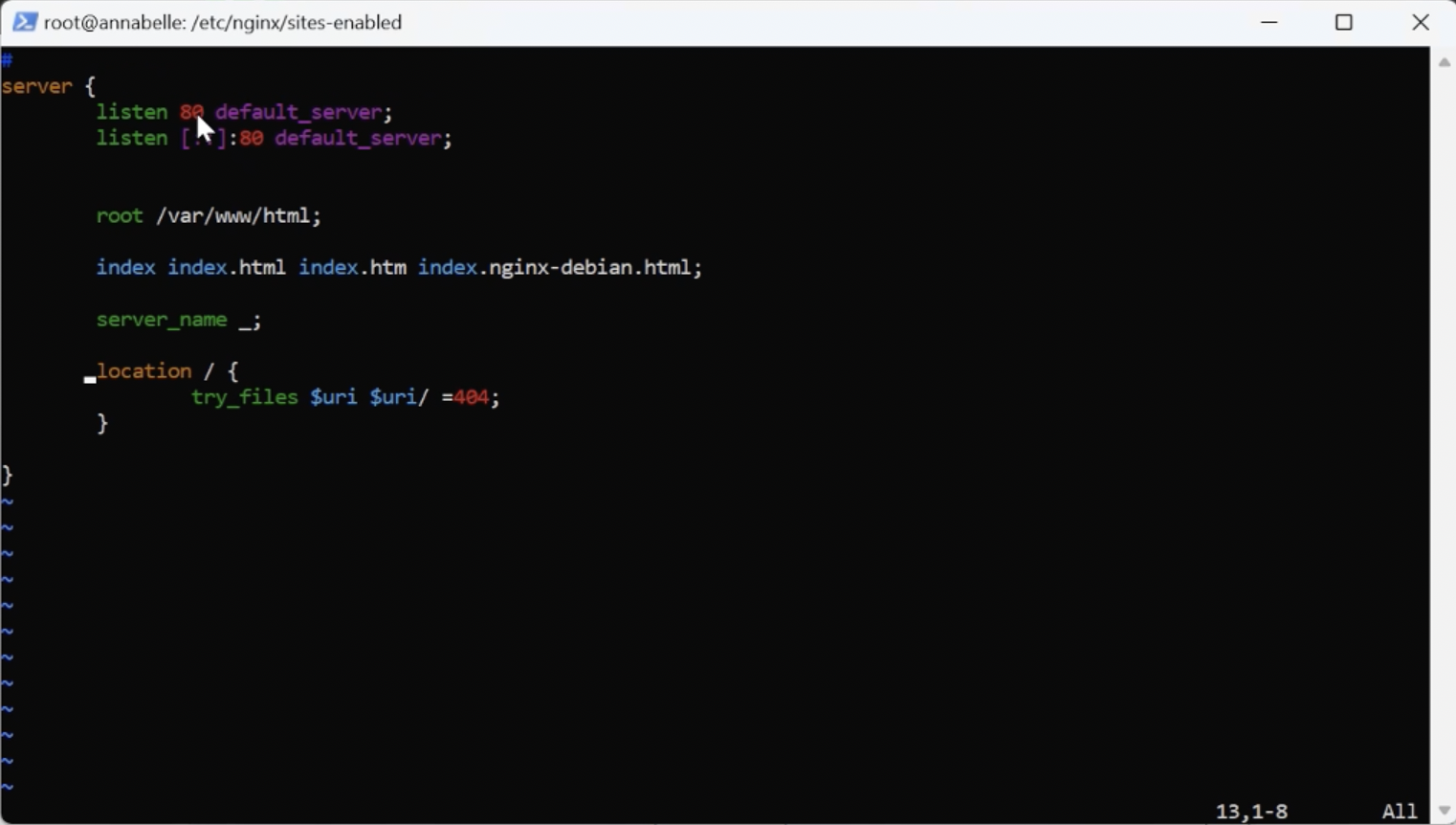

Now let’s move to the “sites-enabled” folder that the include pointed to. Find the “default” file and open it with Vim. There are numerous comments here that should be removed to avoid distraction.

It may look daunting, so delete all the blue hash-tagged comments. For first-timers, don’t be alarmed; there’s nothing too complicated here. Let’s review the config line by line.

The first line “listen 80” indicates that the web server will listen on port 80. For HTTP, this is the default primary port. You can specify any port here, but you’ll need to include it in the URL each time.

The next line “root /var/www/html” specifies the path to the folder containing static files like index.html, CSS files, JS files, images, etc. Next, the “index” directive lists the entry points. In our case, it’s index.html. Different names can be assigned here. Then “server_name” is specified; it’s not particularly interesting to us yet. The next directive is “location”, which handles URL processing. It allows for configuring redirects, proxying, and handling query parameters.

This is what a minimal config looks like for nginx to serve static files.
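For reference, here is a sketch of such a minimal config, written to a local scratch file so it can be reviewed before anything on the server is touched. The output path is hypothetical, and the exact contents of your default file may differ slightly from this reconstruction.

```shell
# Sketch: write a minimal static-file server block to a local file for review.
# CONF_OUT is a hypothetical path; on the server the real file is
# /etc/nginx/sites-enabled/default.
CONF_OUT="${CONF_OUT:-./default.conf}"

cat > "$CONF_OUT" <<'EOF'
server {
    listen 80;                 # HTTP port
    root /var/www/html;        # folder with the static files
    index index.html;          # entry point
    server_name _;             # catch-all until a domain is connected

    location / {
        try_files $uri $uri/ =404;   # the file, else the directory, else 404
    }
}
EOF

cat "$CONF_OUT"
```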

Now let’s save the config without any comments. To do this, press Escape followed by :wq.

Let’s try opening the application in a browser using the IP address provided by Servercore. Copy the IP, paste it into the address bar, and you’ll see the default nginx welcome page.

Let’s discuss again how nginx determines which files to serve. The “root” directive specifies the path to the project’s folder; it currently points to /var/www/html. Let’s change it to /var/www/dist. Dist is the folder where our frontend application is compiled.

Save the changes and exit Vim using :wq, and then navigate back several levels to the /var/www folder. Inside lies an html folder that should be deleted using the following command:

rm -rf html

Now let’s build again: npm run build. Once the build folder is ready, move it to /var/www.

Specify which folder you want to move and where it should be moved to.
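The move can be sketched as a tiny helper that takes the folder to move and its destination. The function name is hypothetical; the paths in the usage line are the ones from this article.

```shell
# Sketch: move a fresh build into the folder nginx serves from.
# Arguments: the build folder and the web root.
publish_build() {
  build_dir="$1"
  web_root="$2"
  mv "$build_dir" "$web_root/"
}

# Usage on the server, from the project folder:
# publish_build dist /var/www
```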

Inside the dist folder lies index.html. If you refresh the page now, you’ll get a “not found” message. nginx is still running with the old configuration, which points to the html folder. Since we’ve deleted that folder, the “location” directive finds nothing and returns a 404 error.

To have the system read from the dist folder instead of html, restart nginx using this command:

sudo service nginx restart

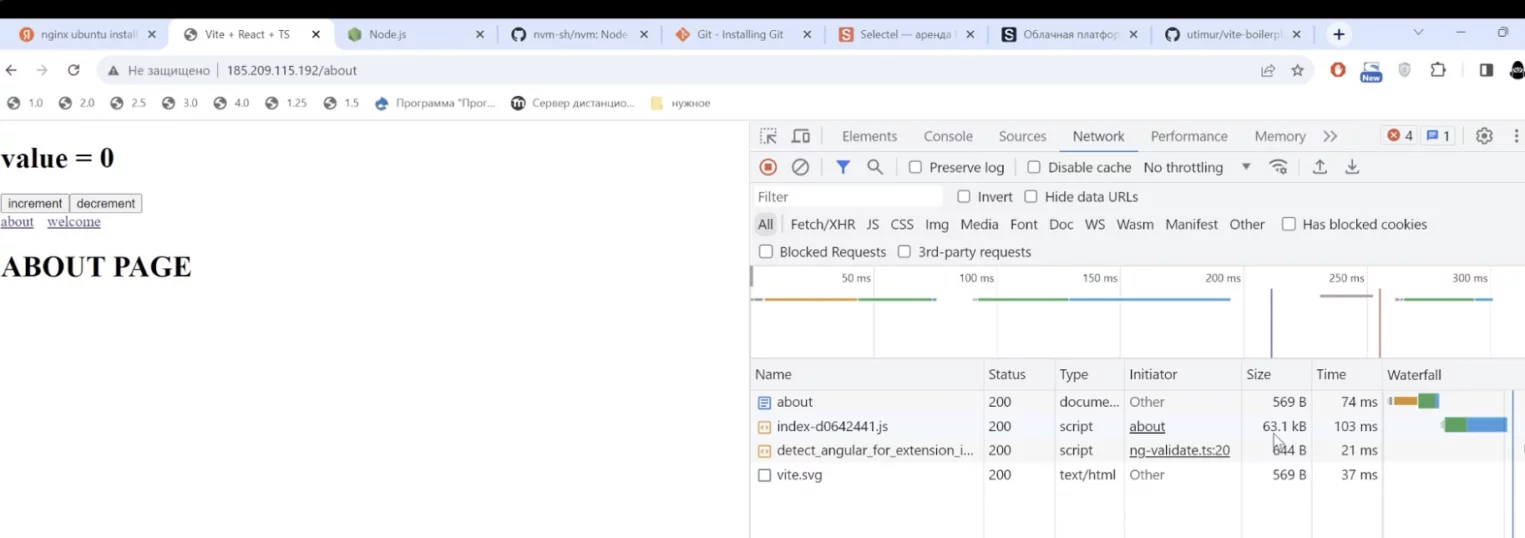

Now the application can be accessed via this IP address. The counter and pages are functioning and switching correctly. However, if the URL contains a path segment, nginx will look for a file at that path after a page refresh. It will find none, because we only have one entry file: index.html.

To make nginx always serve index.html, change the “location” directive: remove the 404 fallback and fall back to index.html instead. On any path segment, nginx will then consistently serve index.html to the user and the client-side router takes over. Currently the path is “welcome”; after the change, refreshing the page works again.
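One way to make that change without opening Vim is sketched below. It assumes the config still contains the stock line “try_files $uri $uri/ =404;” and swaps the 404 for index.html; the function name is hypothetical.

```shell
# Sketch: switch the location block from a 404 fallback to an index.html fallback.
# A backup of the original file is kept next to it with a .bak suffix.
enable_spa_fallback() {
  conf_file="$1"
  sed -i.bak 's|try_files \$uri \$uri/ =404;|try_files $uri $uri/ /index.html;|' "$conf_file"
}

# Usage on the server, followed by a restart:
# enable_spa_fallback /etc/nginx/sites-enabled/default
# sudo service nginx restart
```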

If you create a classic multi-page application with many HTML files instead of a Single Page Application, there’s no need to set up the fallback to index.html.

Halfway There: What We’ve Accomplished

- Rented a cloud server.

- Connected to it via SSH.

- Cloned the project, installed Node.js and NVM.

- Installed node modules, completed a build, and installed nginx.

- Set up static file serving and configured nginx to serve the application build from a separate directory.

An Experiment to Reinforce What We Learned

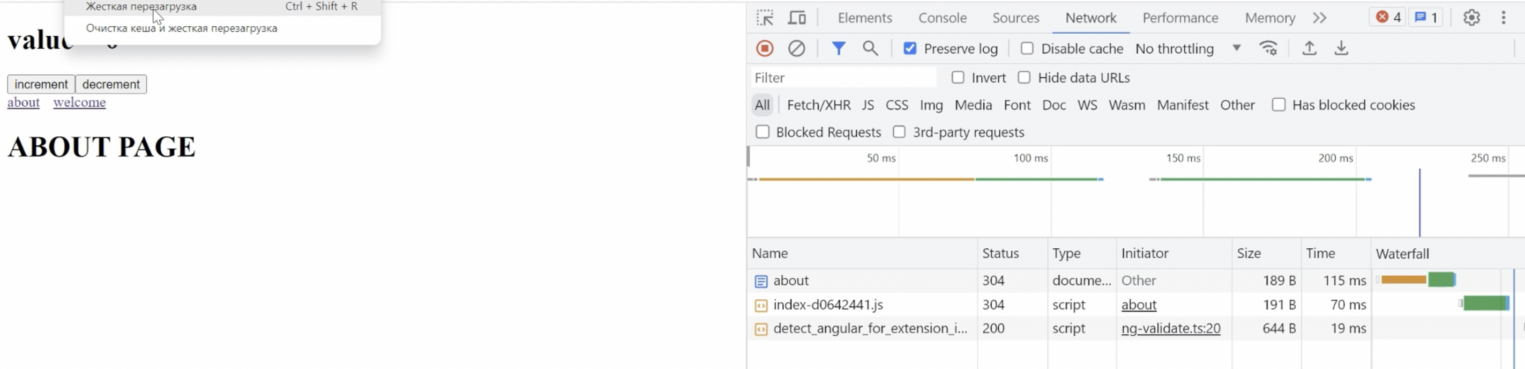

Let’s conduct an experiment. Open developer tools, go to the Network tab, and try refreshing the page.

Look at the Size column and reload with cache cleared. Notice the index bundle – this is precisely the index.js file resulting from the application build.

Bundle Compression

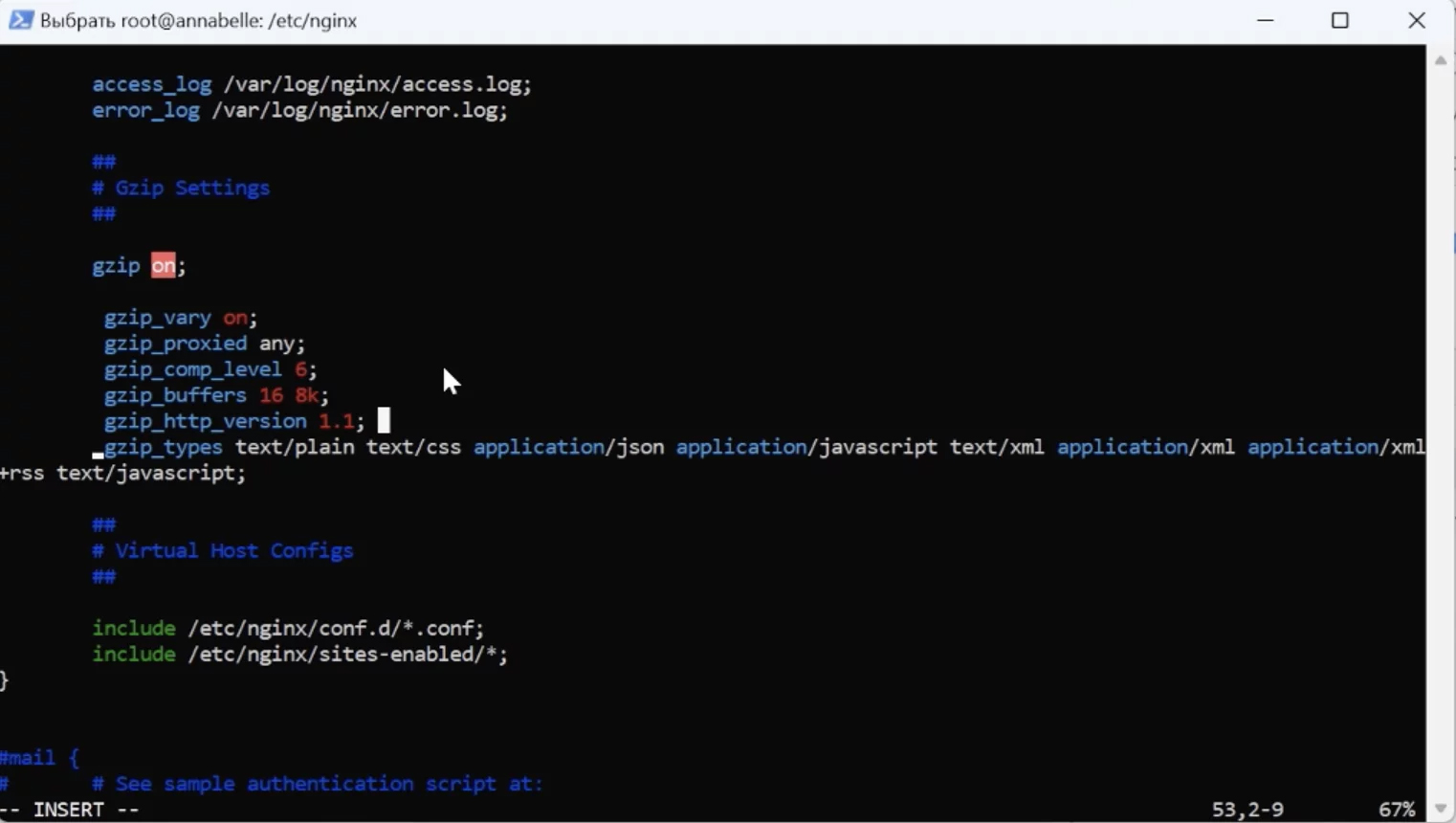

Now let’s attempt to reduce the bundle size. If the application is large and weighs around 5-7 MB, optimize it in code by splitting it into chunks, using lazy loading, etc. Additionally, you can reduce the transferred size using nginx’s gzip module. For gzip to function, uncomment the gzip lines in nginx’s root configuration (nginx.conf).

These lines can also be transferred to our configuration located in the “sites-enabled” folder.
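For orientation, the gzip directives typically found in Ubuntu’s stock nginx.conf look like the sketch below once uncommented. The values are the usual defaults rather than anything specific to this project, and the output path is hypothetical.

```shell
# Sketch: write the gzip-related directives to a local snippet for review.
# They belong in the http block of nginx.conf or in the server block of
# sites-enabled/default.
GZIP_OUT="${GZIP_OUT:-./gzip.conf}"

cat > "$GZIP_OUT" <<'EOF'
gzip on;
gzip_vary on;
gzip_proxied any;
gzip_comp_level 6;
gzip_buffers 16 8k;
gzip_http_version 1.1;
gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;
EOF

cat "$GZIP_OUT"
```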

Pay special attention to gzip_comp_level, which determines the level of file compression. Maximum compression is nine; minimum is one. It’s crucial to find the right balance: the more you compress, the less network traffic is used when transferring the bundle, but higher levels cost more CPU time on the server for progressively smaller gains. Compression is lossless, so the files themselves are never damaged.

Press Escape, type :wq, and restart nginx:

sudo service nginx restart

Any change to the nginx config requires a restart to take effect. Let’s reload the page with cache clearing once more. As we can see, the bundle now weighs roughly three times less: 63 kilobytes instead of 193.

In the response headers, you can see “Content-Encoding: gzip”, which means nginx compressed the file with gzip and the browser decompressed it.
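The same check can be made from the terminal with curl. This is a sketch: the function name and the asset URL are hypothetical, so take the real bundle URL from the Network tab.

```shell
# Sketch: request a file with gzip allowed, then print the Content-Encoding
# header and the number of bytes actually transferred.
check_gzip() {
  url="$1"
  curl -s -o /dev/null -D - -H 'Accept-Encoding: gzip' \
       -w 'bytes transferred: %{size_download}\n' "$url" \
    | grep -i -E '^(content-encoding|bytes transferred)'
}

# Usage (placeholder address and file name):
# check_gzip "http://203.0.113.10/assets/index.js"
```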

Connecting a Domain

The domain itself must be rented beforehand from a hosting provider. Now, let’s set it up so the server can be accessed not only by IP address but also by its domain name.

Enter any unique name for your domain – in our case, let it be vite-deploy-test. To connect, you need to specify the DNS servers that will manage the domain. Since we are hosted on Servercore, we will use its DNS servers.

Copy the domain name into the Servercore control panel and add it. Open this domain and you’ll see the addresses. Let’s return to the instructions and see how to delegate a domain.

Here, you need to transfer control to Servercore’s DNS servers. As you can see, they differ only by the numbers in their names. In the domain settings, click “Connect to another hosting or service” and specify these DNS servers: ns1, ns2, ns3, and ns4.

All that remains is to link the IP address with the domain we rented. Type A resource records exist exactly for this purpose.

These link a domain to an IP address, and this must be done on the provider’s side. Add a type A resource record: the record name is vite-deploy-test.ru, and the value is your server’s IP address, which you can copy from your personal account.

Let’s clarify that this is indeed a type A resource record. There may be many kinds of records, but type A specifically maps a name to an IPv4 address. Now all we have to do is wait for the DNS changes to propagate; there will be a brief waiting period before the domain starts functioning.
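While waiting, you can poll the record from the terminal. The sketch below assumes the dig utility is installed; the function name is hypothetical and the IP address is a placeholder.

```shell
# Sketch: check whether the domain's A record already resolves to the server.
a_record_matches() {
  domain="$1"
  expected_ip="$2"
  resolved_ip="$(dig +short A "$domain" | tail -n 1)"
  [ "$resolved_ip" = "$expected_ip" ]
}

# Usage (placeholder IP):
# a_record_matches vite-deploy-test.ru 203.0.113.10 && echo "DNS is ready"
```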

Return to the domain, copy its name, paste it into the address bar, and open the application via the domain name. Everything should function, except that the browser will report an insecure connection because the site is being accessed via HTTP. If you attempt to switch to HTTPS, you’ll encounter an error since the certificates haven’t been configured yet.

Configuring Certificates

For certificate configuration, let’s use the “Let’s Encrypt” certificate authority. Click “Get Started”, then select Nginx as the HTTP server and Ubuntu as the system. Ubuntu 22 isn’t listed (this OS is installed on our server), but Ubuntu 20 will suffice.

Next, connect to the server via SSH; snapd needs to be installed. To check whether it is, use this command:

snap version

Next, remove any existing certbot installation made through APT. Just in case, execute this command:

sudo apt-get remove certbot

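The next step is to install it via snap and link the binary so that the certbot command is available. Copy these commands:

sudo snap install --classic certbot

sudo ln -s /snap/bin/certbot /usr/bin/certbot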

Certbot has been successfully installed. Now you need to integrate certbot with nginx and configure it. Navigate to the project root, run sudo certbot --nginx, and answer a brief survey:

- Certbot requests an email address; enter yours.

- It then requires confirmation twice; agree both times.

- Subsequently, it will ask you to enter the domain name that you need the certificate for.
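The survey above can also be shortened by passing the domain names up front. This is a sketch under that assumption; the function name is hypothetical, and running sudo certbot --nginx with no extra flags gives the fully interactive flow instead.

```shell
# Sketch: request a certificate for a domain and its www alias
# using certbot's nginx plugin.
request_certificate() {
  domain="$1"
  sudo certbot --nginx -d "$domain" -d "www.${domain}"
}

# Usage:
# request_certificate vite-deploy-test.ru
```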

Deploying the Certificate

Now head over to the nginx sites-enabled folder and open our default configuration. Additional settings have appeared. nginx now listens on port 443, the default port for HTTPS, as well as on port 80 for classic HTTP. You can also see the lines added by Certbot for handling certificates. The only thing left is to pass the domain name in server_name. Input it, and then add the same name with the “www.” prefix, separated by a space. We’re almost finished.

Save and exit Vim, then restart nginx. There’s also a command that checks whether the config is valid; I recommend running it before every restart:

nginx -t
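A common pattern, sketched here with a hypothetical function name, is to chain the two commands so that nginx restarts only when the check passes:

```shell
# Sketch: restart nginx only if the configuration test succeeds.
check_and_restart_nginx() {
  sudo nginx -t && sudo service nginx restart
}

# Usage:
# check_and_restart_nginx
```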

After switching from HTTP to HTTPS, the lock icon in the address bar closes, indicating that the connection is secure, the certificates are valid, and HTTPS is functioning properly.

Almost There

So far, we’ve set up the certificates, the domain name, and the cloud server. Now let’s see how to implement changes in the code. Note that the welcome page has been updated: it now displays the ID taken from the URL parameters and the query string.

Navigate to the welcome page and enter the ID. Let’s ensure that nginx is processing these query parameters correctly.

Working with Changes

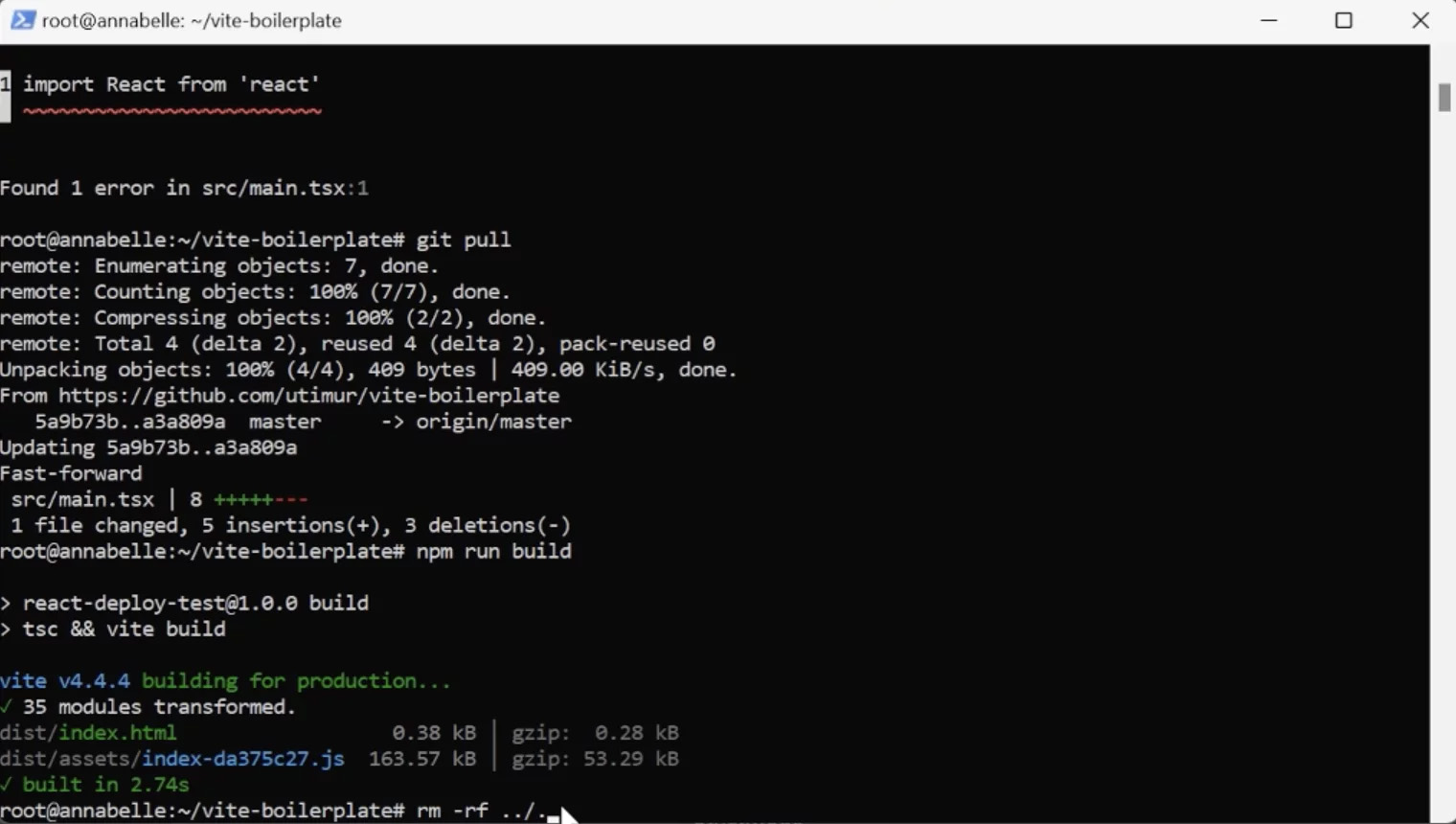

What needs to be done to deliver the new code to the server? First, it needs to be pushed to GitHub. Run git add and git commit with a commit message, then git push origin master to send everything to the remote repository on GitHub.

Second, go to the directory on the server where the project is located. Reconnect via SSH first, as the connection tends to drop after some time. Open the folder and perform a git pull. This way, we’ll pull all the changes from the remote repository on GitHub. Rebuild from scratch:

npm run build

Remove the old build from the /var/www folder and move the new build there: mv dist, followed by the path to the www folder.

Let’s attempt to open the vite-deploy-test application. Now, with the new edits, the ID has been successfully pulled. Let’s try adding a query parameter as well.
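The whole update cycle on the server can be sketched as one function. The function name and the project path in the usage line are hypothetical; the body runs in a subshell so the caller’s working directory is left untouched.

```shell
# Sketch: pull the latest code, rebuild, and swap the served build.
# Arguments: the project folder and, optionally, the web root (default /var/www).
redeploy() (
  project_dir="$1"
  web_root="${2:-/var/www}"
  cd "$project_dir" || exit 1
  git pull &&
  npm run build &&
  rm -rf "${web_root:?}/dist" &&   # :? aborts if web_root is empty
  mv dist "$web_root/"
)

# Usage on the server (hypothetical project path):
# redeploy /vite-deploy-test
```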

All that’s left is to save the nginx configuration. Navigate to the folder where it is located and print the file with this command:

cat default

The entire contents of the file appear in the terminal; copy them.

Open WebStorm, create a .nginx folder, and place the nginx.conf file inside. Paste what you copied from the terminal into this file. If you move to another server, you can take the config with you by copying it to the appropriate folder.

Everything is set for basic scenarios and Single Page Applications. Changes are delivered quickly, and no complex pipeline is necessary. If you need automation and encapsulation into a container, let’s proceed with setting up Docker.

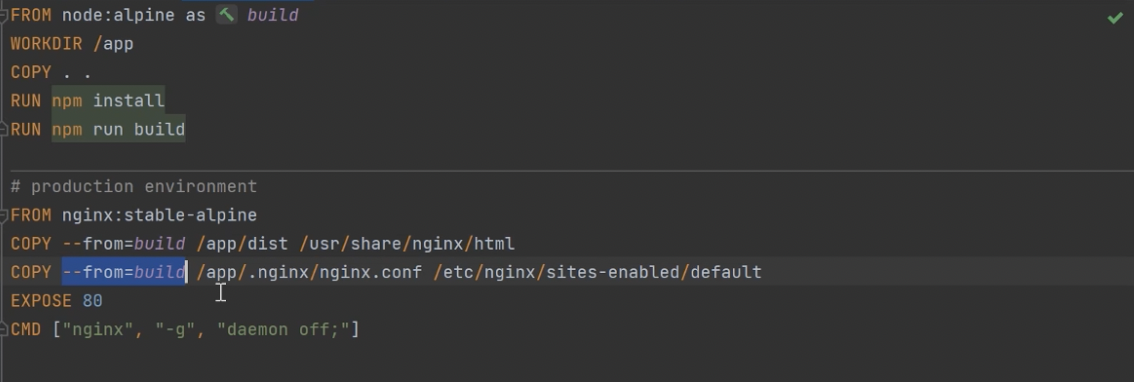

Configuring Docker

Place the Dockerfile in the appropriate folder, typically the project root. It specifies a Node.js-based image, sets the working directory, installs dependencies, and performs a build, then creates an nginx-based image. Copy the build into the folder served by nginx, that is, move “dist” into the folder targeted by the root directive. Ensure that the paths in your nginx config match what you’ve specified here.
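A Dockerfile along these lines might look like the sketch below, written out through a heredoc. The image tags, the /var/www/dist target, and the .nginx/nginx.conf path are assumptions based on the earlier steps. The official nginx image reads server blocks from /etc/nginx/conf.d, so the sketch copies the config there. It also assumes the saved config is the plain HTTP version, because certificate paths added by Certbot will not exist inside the container.

```shell
# Sketch: write a two-stage Dockerfile for the project.
# DOCKERFILE_OUT is a hypothetical, overridable output path.
DOCKERFILE_OUT="${DOCKERFILE_OUT:-./Dockerfile}"

cat > "$DOCKERFILE_OUT" <<'EOF'
# Stage 1: build the application with Node.js
FROM node:18-alpine AS build
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build

# Stage 2: serve the build with nginx
FROM nginx:alpine
COPY --from=build /app/dist /var/www/dist
COPY .nginx/nginx.conf /etc/nginx/conf.d/default.conf
EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
EOF

cat "$DOCKERFILE_OUT"
```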

Finishing Touches

Repeat these steps for the config itself: move it to the nginx “sites-enabled” folder. Launch nginx, and everything will function straight out of the box. At this stage, you might still need to manage ports and set up HTTPS access, but there’s nothing we haven’t covered already.
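Building and starting the container could then be sketched as follows. The image name and the function name are hypothetical. Port 80 on the host must be free, so stop the host’s nginx first or map a different host port.

```shell
# Sketch: build the image from the Dockerfile in the current folder
# and run it in the background, publishing container port 80.
run_container() {
  image_name="${1:-vite-deploy-test}"
  docker build -t "$image_name" . &&
  docker run -d --name "$image_name" -p 80:80 "$image_name"
}

# Usage, from the project root:
# run_container
```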

For simpler scenarios, this is overkill. Docker is required if you have unconventional pipelines or complex infrastructure and seek greater automation. But be aware that the setup will be more complex.

Conclusion

In this guide, we covered all the fundamental steps for deploying a simple frontend application and also experimented with Docker. Regardless of the complexity of the project you wish to deploy, Servercore has the appropriate configuration just for you.